
We need to talk about AI

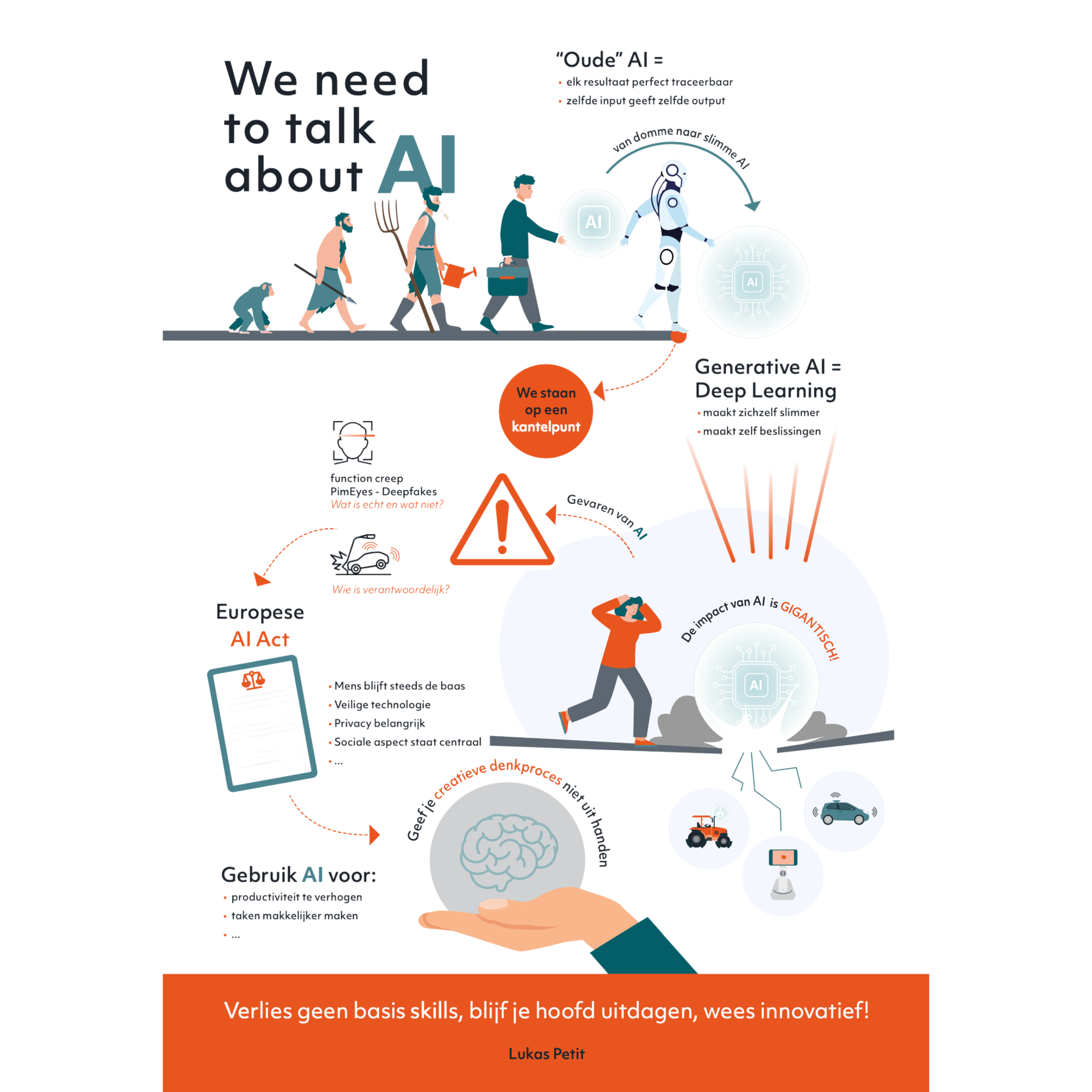

Now that AI has broken through to the general public over the past year, we need to think more than ever about the advantages and disadvantages of the technology. That's according to Lukas Petit, a consultant at Axxes.

Human evolution is unstoppable. We have come a long way and are far from reaching the finish line. AI will inevitably be part of our lives, so it is important to approach it consciously. Understanding the dangers and addressing them is essential. That way, we can harness the benefits of AI while humans remain in control.

Human history is a series of revolutions

In his book "Sapiens: A Brief History of Humankind," historian Yuval Noah Harari outlines how humanity evolved in three major steps. The first, he argues, was the cognitive revolution, which began some 70,000 years ago. Homo sapiens began to think in abstract terms and to ponder the meaning of life. Concepts such as justice and religion emerged, and Homo sapiens spread out of Africa. As we expanded our habitat, we encountered the physically stronger Neanderthals, but we prevailed over them thanks to cooperation and our more developed brains. Those cognitive skills ensured our survival. The cognitive revolution was followed by the agricultural revolution (around 10,000 B.C.), when we shifted from hunting and gathering to farming and abandoned the nomadic lifestyle for settled communities.

Thousands of years later, about five hundred years ago, came the scientific revolution. Great thinkers like Copernicus and Newton changed the sciences forever, and groundbreaking inventions ushered in a new era. European imperialism mapped the world and led to the formation of gigantic empires.

The most recent change is the second cognitive revolution of the 1950s. Scientists began to study how the human mind works, and philosophical interest in the mind surged. Artificial intelligence emerged as a concept, but it has only become fully integrated into society thanks to the technological innovations of recent years.

In each phase of history, humans were confronted with technological progress that shaped the way we live today. Those who did not embrace these revolutions were doomed to be left behind.

From dumb to smart AI

In his presentation at our Haxx conference, consultant Lukas Petit took a deep dive into AI: the ability of a machine to exhibit human-like skills. Software can reason, learn, plan, and even be creative.

Thanks to machine learning, technology can make decisions based on patterns in data. Today, and certainly since the advent of generative AI, this is mainly done through deep learning, in which the program improves itself by training on large amounts of data and, above all, can make decisions on its own. This is the big difference from "old" AI. With "old" AI, every result was perfectly traceable, and the same input would always yield the same output. With generative AI, this is no longer the case.
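
To make that contrast concrete, here is a toy Python sketch, assuming a hand-written spam rule as a stand-in for "old" AI and a tiny word sampler as a stand-in for a generative model. The rule, the candidate words, and their scores are hypothetical; real generative models typically sample from probabilities over tens of thousands of tokens, but the principle of drawing from a distribution rather than following a fixed rule is the same.

```python
import math
import random

# "Old" rule-based AI (hypothetical rule): the same input always gives the same
# output, and every decision can be traced back to the rule that fired.
def rule_based_spam_filter(subject: str) -> str:
    if "free money" in subject.lower():
        return "spam"  # traceable: this exact rule produced the result
    return "not spam"

# Toy stand-in for a generative model: it scores candidate next words and
# *samples* one, so repeated calls with the same input can return different words.
def sample_next_word(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    words = list(scores)
    # Softmax with temperature turns raw scores into probabilities.
    exps = [math.exp(scores[w] / temperature) for w in words]
    total = sum(exps)
    probs = [e / total for e in exps]
    return rng.choices(words, weights=probs, k=1)[0]

scores = {"sunny": 2.0, "cloudy": 1.5, "rainy": 1.0}  # hypothetical scores
rng = random.Random()  # unseeded, so the sampled words differ between runs

print(rule_based_spam_filter("FREE MONEY inside"))  # always "spam"
print([sample_next_word(scores, temperature=1.0, rng=rng) for _ in range(5)])  # varies per run
```

Lowering the temperature toward zero makes the top-scoring word dominate, so the sampler's output becomes nearly repeatable; raising it spreads the choices across more words.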

"We are at a tipping point. Many things are changing, and we will witness many more evolutions," Lukas said. The impact of AI on various sectors is enormous. Think of healthcare, where applications like Barco Demetra help doctors detect skin cancer more efficiently through image recognition. In agriculture, players like John Deere's tractors can recognize weeds, and self-driving cars theoretically know how to obey traffic rules. "Companies that don't jump on the bandwagon now are missing out," Lukas concluded. "I believe we should embrace AI and look for the benefits to assist us. Whether we like it or not, technology has a significant impact on our daily lives."

The dangers of AI

For all the progress generative AI represents, there are of course also drawbacks; according to doomsayers, the end of the world is even near. According to Lukas, it won't come to that extreme, but there are several aspects we need to consider. "There's a lot to consider when collecting and using data. Has everyone given permission for programs to be trained on their data? Who owns that data? What sources do systems like ChatGPT use? These are just some of the questions that concern me."

Seemingly innocent data from one application can also be used for something completely different: so-called function creep. It can start quite innocently, as Lukas illustrated with an example. "What if, in a few years, your insurer offers you a discount in exchange for access to your Strava account? It can start out very appealing, but it can also go too far. Before you know it, you might be refused life insurance because you don't exercise enough."

The same image recognition that can spot skin cancer or weeds can also be used to recognize and categorize people through facial recognition. It powers face-search tools like PimEyes, which can be abused for stalking, and it can be the basis for deepfakes. "The line between the truth and something that merely looks like it is getting thinner," Lukas said. "Fake news makes it easy to manipulate people, but even apart from that, there is little transparency. How do we know, for example, that companies aren't manipulating the results on platforms like ChatGPT by downplaying negative information about themselves in responses?"

Then there are many ethical questions about the responsibility of the companies that create and use such algorithms. Whose fault is it when a self-driving car causes an accident? Who owns sensitive data? How do we secure systems against hackers? And how do we avoid bias when algorithms are trained on data that already contains biases, causing them to misclassify people or objects?

The European AI Act

Fortunately, there are laws and guidelines that answer many of those questions. For example, on June 14, 2023, the European Parliament approved its position on the AI Act, making it the first legislative body worldwide to vote on comprehensive rules for AI. Critics argue, however, that the rules come hopelessly late: artificial intelligence evolves so rapidly that the legislative framework will always be outdated.

"At first, AI will be divided into different categories based on the impact the technology has. Some things are very innocent, such as an algorithm used in a spam filter or video game. Chatbots and banking apps, on the other hand, must be more transparent about how they use AI. The rules for self-driving cars and robotic surgery are even stricter. Additionally, some applications are outright banned, such as the social rating systems we know from China."

The European legislation rests on several important pillars that directly address the dangers Lukas mentioned in his presentation. Human control must always prevail, and the technology must be robust and safe. Privacy and data governance play a role, and companies must be transparent about, among other things, the diversity of their data sources. Societal well-being must always be central, and specific people must be designated as responsible.

"In the first instance, AI will be categorized into different categories based on the impact the technology has. Some things are very innocuous, such as an algorithm used in a spam filter or video game. However, chatbots and banking apps must be more transparent about how they utilize AI. The regulations for self-driving cars and robotic surgery are even more stringent. Additionally, some applications are outright prohibited, such as the social rating systems we know from China."

The European legislation is based on several important principles that directly address the dangers Lukas mentioned in his presentation. Firstly, human control must always prevail, and the technology must be robust and secure. Privacy and data governance play a role, and companies must be transparent about, among other things, the diversity in data sources. The social aspect must always be central, and individuals must be designated as responsible.

Don't lose basic skills, keep challenging your mind, and stay innovative!

Beyond the areas where regulation can provide solutions, Lukas raises other questions worth pondering. "We are losing many basic skills by handing over our thinking process. If we let everything be spoon-fed to us, we can no longer innovate. Tools like Copilot may make us work faster, but not necessarily better. As developers and as humans, though, we need to know what we're doing. Problem solving is essential for our cognitive evolution and invaluable in daily life. Artificial intelligence will undoubtedly increase our productivity and make many tasks easier, but as humans we must handle it consciously. We should not give up our creative thinking process and our basic skills. We must keep using these abilities and actively push ourselves not to always take the quickest path. Only then can the evolution of humanity continue."

Lukas Petit